Using Stripe and ElevenLabs to Power Voice-Driven Merchant Operations: A Proof of Concept

This write-up explains the Terminal Management Operating System, which is powered by Stripe, ElevenLabs, and conversational AI agents.

It’s a project I am no longer working on, so I figured I would share my notes :)

Why Terminal Management OS?

Imagine this: a merchant scales their sales efforts across multiple physical locations, supported by a worker that understands their products and story, can take payments and bookings, and can work in multiple languages. Compared to a human, the ramp-up time is zero:

“Our product contains X allergens and is best for Y skin type. What’s your skin type?”

So, we asked ourselves: what if a knowledgeable, adaptable, voice-driven system were a first-class feature of the payment and shopping experience?

“When would be best to book X? Ah, sorry, that time isn’t available. We can book time B or C instead.”

“That product is out of stock here, but we have it available in our Sheffield warehouse. How about we get it delivered to you? Enter your address on the keypad below and make a payment to secure delivery.”

That’s the premise behind the Terminal Management Operating System.

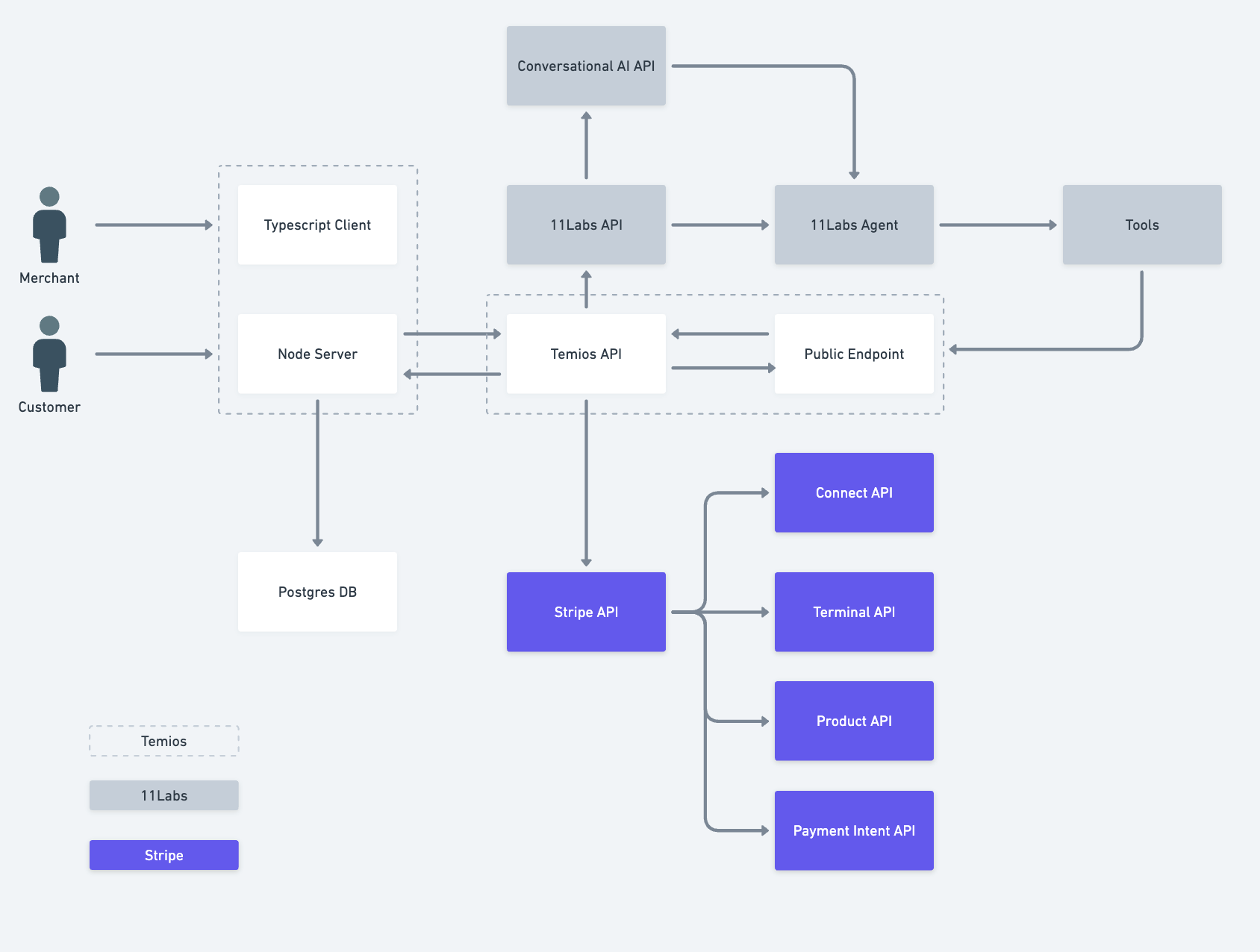

System Architecture: Stripe + ElevenLabs + AI Agent

Our stack connects Stripe for payments, ElevenLabs for voice, and an AI agent that drives the interactions. The components are very tightly coupled to increase shipping speed.

The image above shows a very high-level architecture for the project.

I will break down each segment, with corresponding links, images and documentation.

- Stripe API

- ElevenLabs API

- Terminal Management Operating System API

1. Stripe API Integration (with Connect + Terminal)

We use Stripe as the financial and payment backbone of Temi OS. Here’s how:

Key Capabilities Used:

Product creation: stored both in our database and in Stripe

- Kept in sync between Stripe and the database

- Used in Stripe for purchases

- Used in the database for stock management and for linking products to knowledge bases

- https://docs.stripe.com/products-prices/how-products-and-prices-work#products
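A minimal sketch of that coupling, assuming a hypothetical `LocalProduct` row in our own database that keeps the Stripe product ID next to stock and knowledge-base links. `toStripeProductParams` only builds the body for Stripe's product-create call (`name`, `default_price_data` with `currency` and `unit_amount`, plus `metadata`), so no network call is made; `recordSale` shows the database side of stock management. All type and function names here are illustrative, not taken from the original code.

```typescript
// Hypothetical shape of a product row in our own database (e.g. a Prisma model).
interface LocalProduct {
  id: string;                 // our database ID
  stripeProductId?: string;   // saved after Stripe returns the created product
  name: string;
  unitAmount: number;         // price in the smallest currency unit (pence for GBP)
  currency: string;           // lowercase ISO code, e.g. "gbp"
  stock: number;              // stock is tracked in our database, not in Stripe
  knowledgeBaseIds: string[]; // knowledge bases the voice agent can consult
}

// Body for a Stripe product-create request. Storing our ID in metadata
// lets us map a Stripe product back to the database row later.
function toStripeProductParams(p: LocalProduct) {
  return {
    name: p.name,
    default_price_data: { currency: p.currency, unit_amount: p.unitAmount },
    metadata: { localProductId: p.id },
  };
}

// Database-side stock update after a successful payment.
// Returns a new row and throws rather than letting stock go negative.
function recordSale(p: LocalProduct, quantity: number): LocalProduct {
  if (!Number.isInteger(quantity) || quantity <= 0) {
    throw new Error("quantity must be a positive integer");
  }
  if (quantity > p.stock) {
    throw new Error(`only ${p.stock} of ${p.name} in stock`);
  }
  return { ...p, stock: p.stock - quantity };
}
```

Keeping price data in Stripe and stock in the database means each system stays the source of truth for the thing it is good at; the metadata link is what keeps them coupled.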

Terminal location setup: for merchant hardware

- In Stripe, Terminal is organised into Locations, each with its corresponding Readers. A reader is the physical terminal: either a hardware terminal, as used in the code, or a Tap to Pay Android or iOS device.

- Terminal suite: Location → N readers

- https://docs.stripe.com/terminal

- I used a server-driven Terminal integration

- https://docs.stripe.com/terminal/designing-integration#use-it-in-a-server-driven-integration
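In a server-driven integration, the backend rather than the device decides which reader handles a payment. A minimal sketch of that routing step, assuming we already hold reader objects fetched from Stripe: a Stripe Terminal Reader carries a `location` ID and a `status` of `online` or `offline`. `ReaderSummary`, `readersByLocation` and `pickReader` are hypothetical helpers, not part of Stripe's library.

```typescript
// Subset of the fields on a Stripe Terminal Reader object used here.
interface ReaderSummary {
  id: string;                          // reader ID, e.g. "tmr_..."
  label: string;                       // human-readable name set by the merchant
  location: string | null;             // ID of the Location the reader belongs to
  status: "online" | "offline" | null;
}

// One Location -> N readers: group readers by their location ID.
function readersByLocation(readers: ReaderSummary[]): Map<string, ReaderSummary[]> {
  const groups = new Map<string, ReaderSummary[]>();
  for (const r of readers) {
    if (r.location === null) continue; // skip readers with no location
    const list = groups.get(r.location) ?? [];
    list.push(r);
    groups.set(r.location, list);
  }
  return groups;
}

// Pick the first online reader at a location, or undefined if none is available.
function pickReader(readers: ReaderSummary[], locationId: string): ReaderSummary | undefined {
  return readersByLocation(readers)
    .get(locationId)
    ?.find((r) => r.status === "online");
}
```

With the official `stripe` Node library, the chosen reader's ID would then be passed to `stripe.terminal.readers.processPaymentIntent(readerId, { payment_intent })`; that call is outside this sketch.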

Payment intents: card-present transactions and metadata

- A PaymentIntent is the primitive Stripe uses to create any kind of payment. Its parameters determine how the payment behaves.

- In-person Terminal payments are card-present payments, and the card networks (Visa, Mastercard, Amex, etc.) apply their own data requirements and rules, which affect reporting data, transaction costs and more.

- PaymentIntents are used in conjunction with Terminal and Connect.

- Overview: https://docs.stripe.com/payments/payment-intents

- API reference: https://docs.stripe.com/api/payment_intents
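For Terminal, the PaymentIntent is created with `payment_method_types: ["card_present"]` so Stripe treats it as a card-present payment. A minimal sketch that builds those create parameters, assuming amounts arrive in the smallest currency unit and that we attach our own order and location IDs as metadata. The `CartLine` type, the helper name and the metadata keys are hypothetical. `capture_method` controls whether funds are captured as soon as the card is authorised (`"automatic"`) or later by the platform (`"manual"`).

```typescript
// Hypothetical cart line as held by the Terminal OS backend.
interface CartLine {
  productId: string;  // our database product ID
  unitAmount: number; // smallest currency unit, e.g. pence
  quantity: number;
}

type CaptureMethod = "automatic" | "manual";

// Builds the body for a card-present PaymentIntent create request.
function buildCardPresentIntentParams(
  lines: CartLine[],
  currency: string,
  meta: { orderId: string; locationId: string },
  captureMethod: CaptureMethod = "automatic",
) {
  const amount = lines.reduce((sum, l) => sum + l.unitAmount * l.quantity, 0);
  if (!Number.isInteger(amount) || amount <= 0) {
    throw new Error("amount must be a positive integer in the smallest currency unit");
  }
  return {
    amount,
    currency,
    payment_method_types: ["card_present"],
    capture_method: captureMethod,
    // Metadata is free-form string key/value pairs; these keys are our own convention.
    metadata: { order_id: meta.orderId, location_id: meta.locationId },
  };
}
```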

Stripe Connect: the mechanism that turns the Terminal Management Operating System into a multi-party platform and lets us monetise transactions.

- This is the same mechanism used by Shopify, Deliveroo, Amazon, etc. for multi-party payments.

- https://docs.stripe.com/connect

- High-level overview: https://stripe.com/connect

Which Connect capabilities do we care about?

- Merchant onboarding: how do we get new merchants onto the system and taking payments in a way that is quick, compliant and easy to scale? See https://docs.stripe.com/connect/onboarding#embedded-onboarding

- Application fees: we collect a percentage of each transaction

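A minimal sketch of the per-transaction fee, assuming the platform's percentage is configured in basis points (the `feeBps` parameter and both helper names are hypothetical) and rounded to the nearest whole minor unit, since Stripe's `application_fee_amount` must be an integer in the smallest currency unit. With direct charges, the PaymentIntent is created on the merchant's connected account, which `stripe-node` selects through the `{ stripeAccount: "acct_..." }` request option; the sketch only computes those values and makes no API call.

```typescript
// Platform fee in the smallest currency unit. feeBps is basis points:
// 250 bps = 2.5%. Math.round keeps the result an integer, as Stripe requires.
function applicationFeeAmount(amount: number, feeBps: number): number {
  if (!Number.isInteger(amount) || amount < 0) throw new Error("invalid amount");
  if (feeBps < 0 || feeBps > 10_000) throw new Error("fee must be between 0% and 100%");
  return Math.round((amount * feeBps) / 10_000);
}

// Extra fields for a direct charge on a connected account: the fee goes in
// the PaymentIntent params, the account ID goes in the request options.
function directChargeFields(amount: number, feeBps: number, connectedAccountId: string) {
  return {
    params: { application_fee_amount: applicationFeeAmount(amount, feeBps) },
    requestOptions: { stripeAccount: connectedAccountId },
  };
}
```

Basis points avoid storing fractional percentages as floats in configuration; the only rounding happens once, on the final fee.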

2. ElevenLabs Conversational AI Integration

We integrate with the ElevenLabs Conversational AI API to generate dynamic, real-time voice responses.

I highly recommend reading through the ElevenLabs Next.js quickstart to develop a high-level understanding:

https://elevenlabs.io/docs/conversational-ai/guides/quickstarts/next-js

Why Conversational AI?

ElevenLabs agents can make tool calls, meaning they can hit our internal APIs to retrieve or act on business data.

In our case, we defined a few tools that the agent can use:

I have also described how to access these tools in the system prompt.
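The tool list itself is not reproduced here, so the following is a hypothetical sketch of the receiving side only: the agent calls one of our endpoints with a tool name and parameters, and the backend dispatches to business logic. The tool names (`check_stock`, `list_booking_slots`), parameter shapes and in-memory data are illustrative stand-ins for database-backed tools, loosely modelled on the example conversations above. Nothing about ElevenLabs' actual request format is assumed beyond "a tool name plus parameters".

```typescript
// Hypothetical in-memory stand-ins for database lookups.
const stockByLocation: Record<string, Record<string, number>> = {
  "sheffield-warehouse": { "serum-30ml": 12 },
  "store-1": { "serum-30ml": 0 },
};
const freeSlots: Record<string, string[]> = {
  "facial-consultation": ["10:00", "14:30"],
};

type ToolResult = { ok: true; data: unknown } | { ok: false; error: string };

// Each tool reads its own parameters and returns JSON-serialisable data
// that the agent can turn into a spoken reply.
const tools: Record<string, (params: Record<string, unknown>) => ToolResult> = {
  check_stock: (params) => {
    const sku = String(params.sku ?? "");
    const available = Object.entries(stockByLocation)
      .filter(([, items]) => (items[sku] ?? 0) > 0)
      .map(([location, items]) => ({ location, quantity: items[sku] }));
    return { ok: true, data: { sku, available } };
  },
  list_booking_slots: (params) => {
    const service = String(params.service ?? "");
    const slots = freeSlots[service];
    return slots
      ? { ok: true, data: { service, slots } }
      : { ok: false, error: `unknown service: ${service}` };
  },
};

// Entry point for an agent tool call: look up the tool by name and run it.
function handleToolCall(name: string, params: Record<string, unknown>): ToolResult {
  const tool = tools[name];
  if (!tool) return { ok: false, error: `unknown tool: ${name}` };
  return tool(params);
}
```

Returning a structured error instead of throwing lets the agent recover conversationally ("that service isn't available") rather than failing the call.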

Example Conversation

Tech Stack & Dependencies

| Tool | Role |
|---|---|
| Next.js (v15.1.7) | Fullstack framework |
| React (v19.0.0) | Frontend UI |
| TypeScript | Type safety |
| Prisma (v6.4.1) | ORM for PostgreSQL |
| Stripe (v13.4.0) | Payments, Terminal, Connect |
| NextAuth (v5.0.0-beta.25) | Authentication |
| Tailwind CSS | Styling |
| Fuse.js | Fuzzy product search |
| date-fns | Date utility helpers |
| ngrok | Local webhook testing |
| ElevenLabs | Voice generation + AI agent integration |